GANs for Data Augmentation: A US Researcher’s Guide

US researchers can utilize Generative Adversarial Networks (GANs) for data augmentation in limited-data scenarios by training GANs to generate synthetic data points that resemble the original dataset, thus expanding the dataset and improving the performance of machine learning models.

Machine learning models are only as good as the data they are trained on, and many research projects have too little of it. This article explores how US researchers can use Generative Adversarial Networks (GANs) for data augmentation in limited-data scenarios, covering the main strategies, practical applications, and known pitfalls.

Generative Adversarial Networks: An Overview for US Researchers

Generative Adversarial Networks (GANs) have emerged as a powerful tool in the field of artificial intelligence, particularly for tasks involving data generation and augmentation. For US researchers facing the challenge of limited datasets, GANs offer a promising solution to enhance the training and performance of machine learning models.

This section provides an overview of GANs, highlighting their architecture, functionality, and potential benefits for data augmentation in various research domains.

Understanding the GAN Architecture

At the heart of a GAN lies a competitive relationship between two neural networks: the generator and the discriminator. The generator aims to create synthetic data that resembles the real data, while the discriminator tries to distinguish between the real and generated data.

This adversarial process drives both networks to improve over time, with the generator producing increasingly realistic data and the discriminator becoming more adept at identifying fakes.
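The adversarial loop can be made concrete with a deliberately tiny sketch. It is an illustration under stated assumptions, not a practical model: the real data is assumed to be one-dimensional samples from N(4, 1), the generator is a single affine map, the discriminator is logistic regression, and the gradients are derived by hand so that only the Python standard library is needed. The function name `train_toy_gan` and all parameter values are hypothetical.

```python
import math
import random


def sigmoid(s: float) -> float:
    """Numerically stable logistic function."""
    if s >= 0:
        return 1.0 / (1.0 + math.exp(-s))
    e = math.exp(s)
    return e / (1.0 + e)


def train_toy_gan(steps: int = 2000, lr: float = 0.01, seed: int = 0):
    """Train a 1-D GAN with hand-derived gradients.

    Generator:      G(z) = a * z + b
    Discriminator:  D(x) = sigmoid(w * x + c)
    Real data:      samples from N(4, 1)  (assumed target distribution)
    """
    rng = random.Random(seed)
    a, b = 1.0, 0.0   # generator parameters
    w, c = 0.0, 0.0   # discriminator parameters

    for _ in range(steps):
        x_real = rng.gauss(4.0, 1.0)
        z = rng.gauss(0.0, 1.0)
        x_fake = a * z + b

        # Discriminator step: minimize -[log D(x_real) + log(1 - D(x_fake))].
        d_real = sigmoid(w * x_real + c)
        d_fake = sigmoid(w * x_fake + c)
        grad_w = (d_real - 1.0) * x_real + d_fake * x_fake
        grad_c = (d_real - 1.0) + d_fake
        w -= lr * grad_w
        c -= lr * grad_c

        # Generator step: minimize -log D(G(z)), the non-saturating loss.
        d_fake = sigmoid(w * x_fake + c)
        grad_x = (d_fake - 1.0) * w   # derivative of the loss w.r.t. x_fake
        a -= lr * grad_x * z
        b -= lr * grad_x

    return {"a": a, "b": b, "w": w, "c": c}


params = train_toy_gan()
```

Each iteration first updates the discriminator to separate real from generated samples, then updates the generator to raise the discriminator's score on its output. A discriminator this simple can do little more than compare means, and the parameters may oscillate rather than settle, which is a small-scale preview of the training instability discussed later in this article.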

How GANs Facilitate Data Augmentation

Data augmentation involves creating new, synthetic data points from existing data to expand the size and diversity of a dataset. GANs are particularly well-suited for this task, as they can learn the underlying distribution of the real data and generate new samples that capture its essential characteristics.

For US researchers working with limited datasets, GANs can effectively boost the performance of machine learning models by providing them with more training data. This is especially valuable in domains where data collection is expensive, time-consuming, or ethically challenging.
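Once a generator has been trained, the augmentation step itself is simple bookkeeping. The sketch below assumes the synthetic samples already exist as a list; the function name `augment_with_synthetic` and the `max_synthetic_fraction` cap are hypothetical, and the right mixing ratio depends on the task.

```python
import random


def augment_with_synthetic(real, synthetic, max_synthetic_fraction=0.5, seed=0):
    """Return a shuffled training set of real plus sampled synthetic items.

    max_synthetic_fraction caps the share of synthetic items in the result
    (a hypothetical knob; the right value is task-dependent).
    """
    if not 0.0 <= max_synthetic_fraction < 1.0:
        raise ValueError("max_synthetic_fraction must be in [0, 1)")
    rng = random.Random(seed)
    # Solve n_syn / (n_real + n_syn) <= fraction for n_syn.
    limit = int(len(real) * max_synthetic_fraction / (1.0 - max_synthetic_fraction))
    n_syn = min(limit, len(synthetic))
    chosen = rng.sample(list(synthetic), n_syn)
    # Tag each item with its origin so evaluation can stay real-only.
    combined = [(x, "real") for x in real] + [(x, "synthetic") for x in chosen]
    rng.shuffle(combined)
    return combined


real_items = list(range(100))
synthetic_items = list(range(1000, 1500))
train_set = augment_with_synthetic(real_items, synthetic_items)
```

Tagging the origin of each item makes it easy to keep validation and test sets purely real, so that any measured improvement reflects performance on genuine data.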

- GANs can generate realistic synthetic data.

- GANs enhance machine learning model performance in data-scarce scenarios.

- GANs learn the underlying data distribution.

- GANs offer custom data generation.

In conclusion, GANs provide a robust framework for data augmentation, enabling researchers to overcome the limitations of small datasets and improve the accuracy and reliability of their AI models.

Addressing Limited Data with GANs: Strategies for US Research

The scarcity of data poses a significant hurdle for many AI research projects in the US. GANs offer innovative strategies to mitigate this challenge by generating synthetic data that can effectively augment limited datasets. This section will explore various techniques for leveraging GANs in such scenarios.

By understanding these strategies, US researchers can unlock the full potential of GANs to enhance their research outcomes.

Conditional GANs for Targeted Data Generation

Conditional GANs (cGANs) offer a refined approach to data augmentation by allowing researchers to control the characteristics of the generated data. By conditioning the generator and discriminator on specific labels or attributes, cGANs can produce synthetic samples that match particular categories or criteria.

This is particularly useful when researchers need to balance their datasets or generate specific types of data that are underrepresented in the original dataset.
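As a rough sketch of how this plays out for class balancing, the code below counts how many synthetic samples each class would need and builds a conditioned generator input by concatenating noise with a one-hot label, which is one common way to condition a generator. The class names, helper names, and dimensions are hypothetical, and the generator network itself is omitted.

```python
import random
from collections import Counter


def synthetic_counts_to_balance(labels):
    """Number of synthetic samples needed per class to match the largest class."""
    counts = Counter(labels)
    target = max(counts.values())
    return {cls: target - n for cls, n in counts.items()}


def one_hot(index, num_classes):
    """One-hot encoding of a class index as a list of floats."""
    return [1.0 if i == index else 0.0 for i in range(num_classes)]


def conditioned_generator_input(class_index, num_classes, latent_dim, rng):
    """Concatenate a noise vector with the one-hot label for the requested class."""
    noise = [rng.gauss(0.0, 1.0) for _ in range(latent_dim)]
    return noise + one_hot(class_index, num_classes)


labels = ["benign"] * 90 + ["malignant"] * 10   # hypothetical imbalanced dataset
needed = synthetic_counts_to_balance(labels)
example_input = conditioned_generator_input(1, 2, 8, random.Random(0))
```

Here `needed` reports that the minority class requires 80 synthetic samples, and each of those would be produced by feeding the generator an input whose label part selects that class.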

Semi-Supervised Learning with GANs

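Semi-supervised learning combines labeled and unlabeled data to train machine learning models. GANs can be integrated into semi-supervised learning frameworks, typically by extending the discriminator into a classifier that learns from labeled samples, unlabeled samples, and generated samples at the same time. The unlabeled and generated data give the classifier extra signal about the shape of the data distribution, which can improve accuracy beyond what the labeled set alone supports.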

This approach is especially beneficial when labeled data is scarce but unlabeled data is abundant, as it allows researchers to leverage the information contained in the unlabeled data to enhance model training.
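One published formulation of this idea, named here as an illustration because the article does not commit to a specific method, gives the discriminator $K + 1$ outputs: the $K$ real classes plus one extra class for generated samples. Its loss is then the sum of a supervised and an unsupervised term, where $p_D$ denotes the discriminator's predicted class probabilities and $G$ the generator:

$$
L_D = L_{\text{supervised}} + L_{\text{unsupervised}}
$$

$$
L_{\text{supervised}} = -\,\mathbb{E}_{(x, y) \sim p_{\text{data}}} \log p_D(y \mid x,\; y \le K)
$$

$$
L_{\text{unsupervised}} = -\,\mathbb{E}_{x \sim p_{\text{data}}} \log\bigl[1 - p_D(y = K + 1 \mid x)\bigr] \;-\; \mathbb{E}_{z \sim p_z} \log p_D\bigl(y = K + 1 \mid G(z)\bigr)
$$

The supervised term uses only labeled data, while the unsupervised term needs no labels at all: it only asks the discriminator to tell real inputs from generated ones.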

- Conditional GANs enable targeted data generation.

- Semi-supervised learning leverages unlabeled data.

- GANs balance datasets and improve accuracy.

- These strategies are helpful in many limited-data scenarios.

In summary, GANs offer a diverse range of strategies for addressing the challenges of limited data in research. By employing techniques such as conditional GANs and semi-supervised learning, US researchers can effectively augment their datasets and achieve more robust and reliable AI models.

Practical Applications of GANs in US Research Domains

GANs are not merely theoretical constructs; they have a wide range of practical applications across diverse research domains in the US. From medical imaging to cybersecurity, GANs are transforming the way researchers approach data-driven problems. This section highlights some key areas where GANs are making a significant impact.

These examples illustrate the transformative potential of GANs in addressing real-world challenges and driving innovation across various fields.

Medical Imaging: Enhancing Diagnostics with Synthetic Data

In medical imaging, GANs are used to generate synthetic medical images, such as X-rays, MRIs, and CT scans. These synthetic images can be used to augment limited datasets and improve the training of diagnostic models. They can also help protect patient privacy by reducing the need to share sensitive medical data, provided the generator has not simply memorized individual training images.

By generating realistic medical images, GANs are helping to advance the development of more accurate and reliable diagnostic tools.

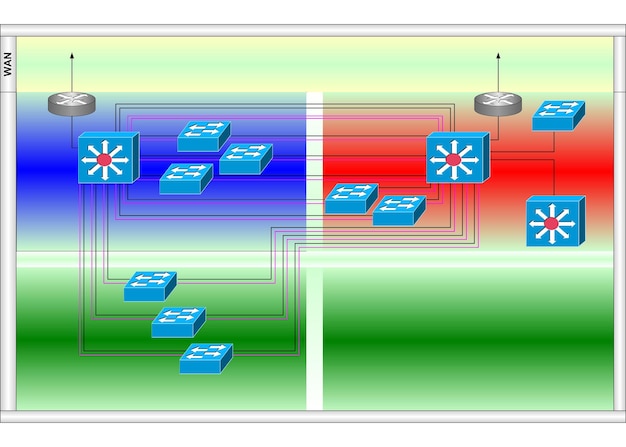

Cybersecurity: Creating Realistic Attack Scenarios

GANs are also being applied in cybersecurity to generate realistic attack scenarios and network traffic data. This synthetic data can be used to train and evaluate intrusion detection systems, identify vulnerabilities in software, and improve the resilience of computer networks.

By simulating real-world attacks, GANs help cybersecurity professionals stay one step ahead of malicious actors and protect critical infrastructure.

Materials Science: Accelerating the Discovery of New Materials

In materials science, GANs are used to generate new material designs and predict the properties of novel materials. By training on existing materials data, GANs can learn the relationships between material structure and properties, and generate new materials with desired characteristics.

- GANs enhance medical diagnostics.

- GANs improve cybersecurity through attack simulation.

- GANs accelerate material discovery.

- GANs are invaluable across various research applications.

In conclusion, GANs are proving to be a versatile tool with broad applicability across various research domains in the US. By generating synthetic data, GANs are helping researchers overcome data limitations, accelerate discovery, and develop more robust and reliable AI systems.

Best Practices for Implementing GANs in Limited-Data Scenarios

While GANs offer great promise for data augmentation, implementing them effectively in limited-data scenarios requires careful planning and execution. This section outlines some best practices to ensure successful GAN training and deployment.

By following these guidelines, US researchers can maximize the benefits of GANs while minimizing potential pitfalls.

Careful Selection of GAN Architecture

The choice of GAN architecture can significantly impact the performance of data augmentation. Simpler GAN architectures, such as the original GAN and Deep Convolutional GAN (DCGAN), may be more suitable for small datasets due to their lower complexity and reduced risk of overfitting.

More complex architectures, such as StyleGAN and ProGAN, may require larger datasets to train effectively. Careful consideration of the dataset size and complexity is essential when selecting a GAN architecture.

Hyperparameter Tuning and Regularization

Hyperparameter tuning and regularization techniques are crucial for preventing overfitting in limited-data scenarios, where the discriminator can memorize the small training set and stop giving the generator useful feedback. Techniques such as dropout and weight decay can reduce this risk, and normalization layers such as batch normalization can help to stabilize training. Together they lower the chance that the generator ends up reproducing the training data.

Careful selection of learning rates, batch sizes, and other hyperparameters is also essential for achieving optimal performance.
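Keeping these settings in one place makes a tuning run easier to reproduce. The sketch below is one possible layout, not a recommendation: the default values follow the settings reported in the DCGAN paper (latent size 100, batch size 128, Adam with learning rate 0.0002 and beta1 0.5), while the class name `GANConfig`, the helper `fit_to_dataset`, and the two regularization fields are hypothetical.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class GANConfig:
    """Starting-point hyperparameters; defaults follow the DCGAN paper's settings."""
    latent_dim: int = 100                 # size of the noise vector z
    batch_size: int = 128
    learning_rate: float = 2e-4           # Adam step size
    adam_beta1: float = 0.5               # lower than Adam's usual 0.9
    weight_decay: float = 0.0             # off by default; a knob to tune
    discriminator_dropout: float = 0.0    # off by default; a knob to tune


def fit_to_dataset(config: GANConfig, dataset_size: int) -> GANConfig:
    """Shrink the batch size so one batch never exceeds the dataset."""
    if dataset_size < 1:
        raise ValueError("dataset_size must be positive")
    return replace(config, batch_size=min(config.batch_size, dataset_size))


base = GANConfig()
small = fit_to_dataset(base, dataset_size=50)
```

Because the dataclass is frozen, each experiment gets its own immutable configuration, and `fit_to_dataset` returns a modified copy rather than changing the defaults.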

Evaluation Metrics for GAN Performance

Evaluating the performance of GANs in data augmentation is critical to ensure that the generated data is of high quality and suitable for downstream tasks. Fréchet Inception Distance (FID) and kernel Maximum Mean Discrepancy (MMD) measure how far the distribution of generated data is from that of the real data, while Inception Score (IS) rates the quality and diversity of the generated samples on their own. FID and IS both rely on a pretrained Inception image classifier, so they apply mainly to image data.
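Of the metrics named above, kernel MMD is the simplest to compute without a pretrained network. The following is a minimal sketch of the biased squared-MMD estimate with a Gaussian (RBF) kernel, written for small lists of feature vectors. The bandwidth value is an assumption that has to be chosen for the data at hand, and the quadratic cost makes this form unsuitable for large samples.

```python
import math


def rbf_kernel(x, y, bandwidth):
    """Gaussian kernel between two equal-length feature vectors."""
    sq_dist = sum((a - b) ** 2 for a, b in zip(x, y))
    return math.exp(-sq_dist / (2.0 * bandwidth ** 2))


def mmd_squared(real, generated, bandwidth=1.0):
    """Biased estimate of squared MMD between two samples of feature vectors."""
    def mean_kernel(xs, ys):
        total = sum(rbf_kernel(x, y, bandwidth) for x in xs for y in ys)
        return total / (len(xs) * len(ys))

    return (
        mean_kernel(real, real)
        + mean_kernel(generated, generated)
        - 2.0 * mean_kernel(real, generated)
    )


real_features = [[0.0, 0.0], [1.0, 0.0], [0.0, 1.0]]
shifted_features = [[5.0, 5.0], [6.0, 5.0], [5.0, 6.0]]
same_score = mmd_squared(real_features, real_features)
shifted_score = mmd_squared(real_features, shifted_features)
```

The estimate is zero when the two samples are identical and grows as the generated sample drifts away from the real one, so lower values indicate a closer match.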

- Careful selection of GAN architecture is crucial.

- Hyperparameter tuning prevents overfitting.

- Evaluation metrics ensure data quality.

- These best practices optimize GAN implementation.

In summary, implementing GANs effectively in limited-data scenarios requires careful attention to architecture selection, hyperparameter tuning, and evaluation. By following these best practices, US researchers can leverage GANs to create high-quality synthetic data and enhance the performance of their AI models.

Challenges and Limitations of Using GANs for Data Augmentation

While GANs offer numerous benefits for data augmentation, they also come with certain challenges and limitations. Understanding these issues is crucial for researchers to effectively address them and mitigate potential risks. This section outlines some of the key challenges associated with using GANs in limited-data scenarios.

By being aware of these limitations, the US research community can develop better strategies for GAN deployment and contribute to the advancement of GAN technology.

Training Instability and Mode Collapse

One of the most significant challenges in training GANs is the issue of training instability, where the generator and discriminator oscillate without converging to a stable equilibrium. Mode collapse, a related problem, occurs when the generator produces only a limited variety of samples, failing to capture the full diversity of the real data.

These issues can be exacerbated in limited-data scenarios, where the GAN may struggle to learn the underlying data distribution effectively.
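A simple way to spot mode collapse on labeled data is to check which classes the generator actually produces. The sketch below assumes that each generated sample already has a class label, for example from a classifier trained on the real data or from the conditioning label of a conditional GAN. The function name and the `min_share` threshold are hypothetical.

```python
from collections import Counter


def mode_coverage(real_labels, generated_labels, min_share=0.01):
    """Return the fraction of real classes present in the generated data.

    A class counts as covered when it makes up at least min_share of the
    generated samples. Also returns the sorted list of missing classes.
    """
    real_classes = set(real_labels)
    counts = Counter(generated_labels)
    total = len(generated_labels)
    covered = {
        cls for cls in real_classes
        if total and counts[cls] / total >= min_share
    }
    return len(covered) / len(real_classes), sorted(real_classes - covered)


real_labels = [0, 1, 2, 3] * 25
generated_labels = [0] * 95 + [1] * 5   # hypothetical collapsed generator
coverage, missing = mode_coverage(real_labels, generated_labels)
```

In this made-up case the generator covers only half of the classes, and the returned list names the ones it never produces.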

Ensuring Data Quality and Realism

Another challenge is ensuring that the generated data is of sufficient quality and realism to be useful for downstream tasks. If the synthetic data is too dissimilar from the real data, it may degrade the performance of machine learning models trained on the augmented dataset.

Careful evaluation and validation of the generated data are essential to ensure its suitability for the intended application.
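One inexpensive validation step is to measure how far each synthetic sample lies from its nearest real sample. Very large distances suggest unrealistic outputs, and distances near zero suggest the generator is copying training data. The sketch below assumes samples are numeric feature vectors compared with Euclidean distance; the function names and the `duplicate_threshold` parameter are hypothetical.

```python
import math


def nearest_real_distance(sample, real):
    """Euclidean distance from one synthetic sample to its closest real sample."""
    return min(math.dist(sample, r) for r in real)


def summarize_nearest_distances(synthetic, real, duplicate_threshold):
    """Report how close synthetic samples sit to the real training data."""
    distances = [nearest_real_distance(s, real) for s in synthetic]
    near_duplicates = sum(d <= duplicate_threshold for d in distances)
    return {
        "mean_distance": sum(distances) / len(distances),
        "max_distance": max(distances),
        "near_duplicate_fraction": near_duplicates / len(distances),
    }


real_points = [(0.0, 0.0), (1.0, 1.0)]
synthetic_points = [(0.0, 0.0), (1.0, 2.0), (10.0, 10.0)]
report = summarize_nearest_distances(synthetic_points, real_points, 0.1)
```

A check like this complements, rather than replaces, measuring a downstream model's accuracy on held-out real data with and without the synthetic samples.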

Computational Resources and Training Time

Training GANs can be computationally intensive and time-consuming, especially for complex architectures and large datasets. Limited computational resources may pose a barrier to entry for some researchers, and long training times can slow down the research process.

- Training instability and mode collapse persist.

- Ensuring data quality remains a challenge.

- Computational resources can be demanding.

- Addressing limits enhances GAN effectiveness.

In conclusion, while GANs hold great promise for data augmentation, researchers must be aware of their limitations and challenges. Addressing issues such as training instability, data quality, and computational costs is essential for realizing the full potential of GANs in limited-data scenarios.

Future Directions and Innovations in GAN Research

The field of GAN research is rapidly evolving, with new architectures, training techniques, and applications emerging continuously. This section explores some of the exciting future directions and innovations that are likely to shape the future of GAN technology.

By staying abreast of these developments, US researchers can position themselves at the forefront of AI innovation and contribute to the advancement of GANs.

Improved Training Techniques and Stability

One of the key areas of ongoing research is the development of improved training techniques and stability. Researchers are exploring new loss functions, regularization methods, and optimization algorithms that can help to stabilize GAN training and reduce the risk of mode collapse.

These advancements promise to make GANs more accessible and reliable for a wider range of applications.
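A well-known published example of such a loss function, given here as an illustration because the article does not single out a method, is the Wasserstein GAN with gradient penalty. Its discriminator (often called a critic) outputs an unbounded score instead of a probability and is trained with

$$
L_D = \mathbb{E}_{\tilde{x} \sim p_g}\bigl[D(\tilde{x})\bigr] - \mathbb{E}_{x \sim p_{\text{data}}}\bigl[D(x)\bigr] + \lambda\, \mathbb{E}_{\hat{x} \sim p_{\hat{x}}}\Bigl[\bigl(\lVert \nabla_{\hat{x}} D(\hat{x}) \rVert_2 - 1\bigr)^2\Bigr],
\qquad
L_G = -\,\mathbb{E}_{z \sim p_z}\bigl[D(G(z))\bigr]
$$

where $p_g$ is the generator's distribution, $\hat{x}$ is sampled uniformly along straight lines between pairs of real and generated samples, and $\lambda$ weights the penalty (the original paper uses $\lambda = 10$). The penalty term pushes the critic's gradient norm toward 1, which is the regularization that stabilizes training in this formulation.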

Integration with Other AI Techniques

Another promising direction is the integration of GANs with other AI techniques, such as reinforcement learning, transfer learning, and self-supervised learning. By combining GANs with these methods, researchers can create more powerful and versatile AI systems that can tackle complex problems with limited data.

Explainable GANs and Trustworthy AI

As GANs become more widely used in critical applications, there is a growing need for explainable GANs that can provide insights into how they generate data and make decisions. Explainable GANs can help to build trust in AI systems and ensure that they are used ethically and responsibly.

Researchers are developing new techniques for visualizing and interpreting GANs, such as attention mechanisms and feature attribution methods.

- Training techniques and stability will continue improving.

- Integration with other AI methods will create new powerful AI systems.

- Explainable GANs promote trust.

- These advances enable future innovation.

In summary, the future of GAN research is bright, with many exciting developments on the horizon. By focusing on improved training techniques, integration with other AI methods, and explainable GANs, researchers can unlock the full potential of GANs and create AI systems that are more powerful, reliable, and trustworthy.

| Key Point | Brief Description |
|---|---|
| 💡 GANs Overview | GANs are a powerful tool for data generation and augmentation. |
| 🎯 Strategies | Conditional GANs and semi-supervised learning effectively expand datasets. |
| 🔬 Applications | GANs enhance medical imaging, cybersecurity, and materials science. |
| ✅ Best Practices | Follow architectural selection, tuning, and evaluation guidelines. |

FAQ

GANs are machine learning models consisting of a generator that creates synthetic data and a discriminator that distinguishes real from fake data. They are used for data augmentation and generation.

GANs generate synthetic data that resembles real data, effectively expanding the dataset. This improves the training and performance of machine learning models when data is scarce.

Medical imaging, cybersecurity, and materials science are research areas that benefit from GANs. The technology optimizes diagnostics, enhances security, and accelerates discovery by generating synthetic data.

Training instability, mode collapse, ensuring data quality, and high computational costs are primary limitations. These factors need to be addressed to ensure results are practical and beneficial.

Improved training techniques, integration with other AI methods, and explainable GANs are the main expected advances. They should make GAN results more dependable and easier to interpret.

Conclusion

In conclusion, Generative Adversarial Networks offer US researchers a powerful tool for data augmentation in limited-data scenarios. By understanding the strategies, best practices, and challenges associated with GANs, researchers can effectively leverage them to enhance their AI models and drive innovation across various research domains.