The Ethics of AI in US Healthcare: Protecting Patient Rights

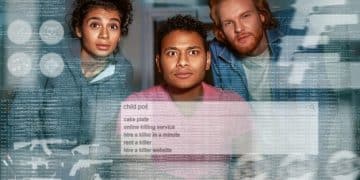

This article explores the ethical implications of using artificial intelligence in US healthcare, focusing on whether current regulations and practices adequately safeguard patient rights as AI technologies advance rapidly.

The relentless march of artificial intelligence (AI) into healthcare promises revolutionary advancements, but alongside this technological surge looms a critical question: are patient rights adequately protected? Examining this intersection has never been more urgent.

The Rise of AI in US Healthcare

Artificial intelligence is rapidly transforming numerous sectors, and healthcare is no exception. From diagnostic tools to personalized treatment plans, AI applications are becoming increasingly prevalent in US healthcare. Understanding the scope and impact of this integration is the first step toward addressing the ethical considerations.

The potential benefits of AI in healthcare are vast, including improved accuracy in diagnoses, more efficient resource allocation, and enhanced patient outcomes. However, the introduction of AI also brings forth complex ethical dilemmas that require careful consideration and proactive solutions.

Current Applications of AI in Healthcare

AI’s integration into healthcare covers a range of applications, each with its own set of ethical considerations. It’s important to understand these applications to fully grasp the potential impact on patient rights. Here are some examples:

- Diagnostic Tools: AI algorithms analyze medical images (X-rays, MRIs) to help detect diseases earlier and, in some settings, more accurately.

- Personalized Treatment Plans: AI uses patient data to tailor treatment plans, optimizing medication and therapies for individual needs.

- Drug Discovery: AI accelerates the drug discovery process by analyzing vast datasets to identify potential drug candidates.

- Robotic Surgery: Robot-assisted surgical systems, increasingly augmented with AI, help surgeons operate with greater precision, which can shorten recovery times.

These applications demonstrate the potential for improving healthcare delivery, but they also highlight areas where ethical oversight is crucial to ensure patient well-being. The question remains: are these implementations safe?

In conclusion, the rise of AI in US healthcare presents both unparalleled opportunities and complex ethical challenges. By understanding the current applications and potential impacts, we can begin to address the critical question of whether patient rights are adequately protected in this technological revolution.

Understanding Patient Rights in the Digital Age

The digital age brings new challenges to patient rights, as AI systems collect, analyze, and use sensitive medical information. Defining and understanding these rights is crucial to safeguarding patient interests. This section outlines key patient rights that are particularly relevant in the context of AI in healthcare.

Protecting patient rights in the digital age requires a multi-faceted approach, including legal frameworks, ethical guidelines, and technological safeguards. But is all of this effective in practice?

Key Patient Rights in Healthcare

Several key patient rights are foundational to ensuring ethical healthcare practices. These rights must be upheld and adapted to the evolving landscape of AI in healthcare:

- Informed Consent: Patients have the right to be fully informed about their treatment options, including the use of AI-driven tools, and to provide voluntary consent.

- Privacy and Confidentiality: Patients have the right to privacy and confidentiality of their medical information, which requires that AI systems adhere to strict data protection protocols.

- Transparency and Explainability: Patients have the right to understand how AI systems are used in their care, including the algorithms’ decision-making processes.

- Data Security: Patients have the right to have their medical data securely stored and protected from unauthorized access or breaches.

These rights form the bedrock of ethical patient care, providing a framework for addressing the unique challenges posed by AI in healthcare. But are these rights enough?

In conclusion, understanding and upholding patient rights in the digital age is essential for ensuring that AI in healthcare is used ethically and responsibly. By focusing on informed consent, privacy, transparency, and data security, we can protect patient interests in this rapidly evolving field.

Data Privacy and Security Concerns

Data privacy and security are paramount concerns in the age of AI in healthcare. AI systems rely on vast amounts of patient data, raising significant questions about how this information is collected, stored, and used. Addressing these concerns is crucial to maintaining patient trust and ensuring ethical practices.

The increasing reliance on AI in healthcare necessitates robust data protection measures and adherence to ethical guidelines. However, these measures can only go so far.

Potential Risks to Data Privacy and Security

Several risks exist regarding data privacy and security in the context of AI in healthcare. These risks must be addressed proactively to prevent breaches and protect patient data:

- Data Breaches: Healthcare data is highly valuable, making it a prime target for cyberattacks. Data breaches can expose sensitive patient information, leading to identity theft and other harms.

- Unauthorized Access: Insufficient access controls can allow unauthorized individuals to access patient data, compromising privacy and confidentiality.

- Data Misuse: Data can be misused for purposes other than direct patient care, such as targeted advertising or discriminatory practices.

These risks highlight the importance of implementing comprehensive data protection strategies and enforcing strict ethical standards. How effective are these strategies?

In conclusion, data privacy and security are critical concerns in the AI-driven healthcare landscape. By addressing the potential risks and implementing robust data protection measures, we can safeguard patient information and maintain trust in this rapidly evolving field.

Bias and Discrimination in AI Algorithms

Bias and discrimination in AI algorithms pose a significant ethical challenge in healthcare. AI systems are trained on data, and if that data reflects existing biases, the algorithms can perpetuate and amplify those biases, leading to unfair or discriminatory outcomes for certain patient groups.

Addressing bias and discrimination in AI algorithms requires a proactive and multi-faceted approach. However, even well-intentioned mitigation measures are not always effective.

Sources of Bias in AI Healthcare Algorithms

Several sources of bias can affect AI algorithms used in healthcare, compromising their accuracy and fairness:

- Data Bias: Training data may not accurately represent all patient populations, leading to skewed results for underrepresented groups.

- Algorithmic Bias: The design of the algorithms themselves can introduce bias, favoring certain outcomes or patient characteristics.

- Human Bias: Human biases in data collection and labeling can inadvertently influence the AI’s decision-making process.

Understanding these sources of bias is crucial for developing strategies to mitigate their impact and ensure equitable healthcare outcomes. Can this truly be achieved?

In conclusion, bias and discrimination in AI algorithms represent a significant ethical challenge in healthcare. By identifying the sources of bias and implementing mitigation strategies, we can work towards creating AI systems that are fair, accurate, and beneficial for all patients.

Liability and Accountability in AI-Driven Decisions

Liability and accountability are critical considerations in AI-driven healthcare decisions. When AI systems make recommendations or guide treatment plans, it’s essential to determine who is responsible when errors occur or patients are harmed. Establishing clear lines of accountability is vital for maintaining patient trust and ensuring ethical practices.

The complexity of AI systems and the shared responsibility among developers, healthcare providers, and regulatory bodies necessitate careful consideration of liability issues. But how should that responsibility be divided?

Determining Responsibility in AI Healthcare

Several factors influence the determination of responsibility in cases involving AI errors or harm in healthcare:

- AI System Design: The design and validation of the AI system play a significant role. Developers must ensure that the system is thoroughly tested and performs as intended.

- Healthcare Provider Oversight: Healthcare providers have a responsibility to review and validate AI-driven recommendations, exercising their clinical judgment in patient care.

- Regulatory Framework: Clear regulatory guidelines are needed to ensure that AI systems meet safety and efficacy standards, and to define liability in cases of harm.

Navigating the complex landscape of liability in AI healthcare requires a collaborative effort among developers, providers, and regulatory bodies. But can such collaboration be achieved in practice?

In conclusion, liability and accountability are crucial considerations in AI-driven healthcare decisions. By establishing clear lines of responsibility and fostering collaboration, we can ensure that AI systems are used ethically and safely, protecting patient interests and maintaining trust in healthcare.

The Role of Regulations and Ethical Guidelines

The role of regulations and ethical guidelines is central to ensuring the responsible use of AI in healthcare. Clear and comprehensive regulations are needed to govern the development, deployment, and oversight of AI systems, safeguarding patient rights and promoting ethical practices. Ethical guidelines provide a framework for addressing complex ethical dilemmas and ensuring that AI is used in a way that aligns with societal values.

Effective regulations and ethical guidelines must be adaptable to the rapidly evolving AI landscape, incorporating new technologies and addressing emerging ethical challenges. But how adaptable are current guidelines?

Current and Proposed Regulations

Several current and proposed regulations aim to address the ethical and legal issues raised by AI in healthcare:

- HIPAA Compliance: The Health Insurance Portability and Accountability Act (HIPAA) sets standards for protecting patient privacy and security, which must be upheld in AI-driven healthcare applications.

- FDA Oversight: The Food and Drug Administration (FDA) regulates AI-based software that qualifies as a medical device, including many diagnostic tools, reviewing it for safety and effectiveness.

- Proposed AI Legislation: Several legislative initiatives are underway to establish comprehensive regulations for AI, addressing issues such as bias, transparency, and accountability.

These regulations and proposed legislation represent a crucial step toward ensuring the responsible use of AI in healthcare. Nonetheless, are they enough?

In conclusion, regulations and ethical guidelines play a vital role in ensuring the responsible use of AI in healthcare. By establishing clear standards and promoting ethical practices, we can harness the benefits of AI while protecting patient rights and maintaining trust in the healthcare system. But do you trust it?

| Key Area | Brief Description |
|---|---|
| 🛡️ Patient Rights | Ensuring informed consent, privacy, and transparency in AI-driven decisions. |
| 🔒 Data Security | Protecting patient data from breaches and unauthorized access. |
| ⚖️ Algorithmic Bias | Mitigating bias in AI algorithms to ensure equitable outcomes. |
| 🧑‍⚖️ Accountability | Establishing clear responsibility for AI-driven healthcare decisions. |

Frequently Asked Questions

What are the main data privacy risks of using AI in healthcare?

AI systems use large amounts of patient data, raising concerns about data breaches and unauthorized access. Robust data protection measures and strict adherence to privacy regulations like HIPAA are essential to mitigate these risks.

How can AI algorithms lead to bias and discrimination in healthcare?

AI algorithms can perpetuate and amplify biases present in the data they are trained on, leading to unfair or discriminatory outcomes for certain patient groups. Careful data curation and algorithm design are necessary to mitigate these biases.

Who is responsible when an AI system contributes to patient harm?

Responsibility can be shared between AI developers, healthcare providers, and regulatory bodies. Clear guidelines and oversight are needed to ensure that AI systems are used safely and ethically, and to determine liability in cases of harm.

What regulations currently govern AI in US healthcare?

HIPAA sets standards for patient privacy, and the FDA regulates AI-based medical devices. Additional legislation has been proposed to address issues like bias and accountability, with the aim of creating a more comprehensive regulatory framework for AI in healthcare.

How can patients protect their rights when AI is used in their care?

Patients should be fully informed about the use of AI in their treatment, provide informed consent, and have access to clear explanations of how AI systems are used in their care. Asking providers how AI tools are used and how personal data is handled can also help patients protect their rights.

Conclusion

The ethics of AI in US healthcare require a comprehensive approach to data privacy, algorithmic bias, and accountability. Regulations and ethical guidelines must evolve alongside AI technologies to ensure that patient rights are protected and to foster trust in healthcare.