AI in Warfare: Navigating Ethical Boundaries for the U.S. Military

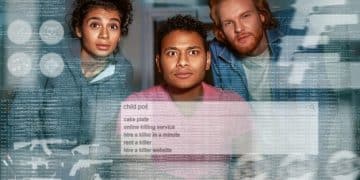

What are the ethical boundaries for the U.S. military's use of AI in warfare? This article examines how to define those limits when deploying artificial intelligence, addressing concerns about bias, accountability, and unintended consequences on the battlefield.

The integration of artificial intelligence into military operations is rapidly changing the landscape of warfare. What ethical boundaries should govern the U.S. military's use of AI? The question demands careful consideration as AI systems become increasingly sophisticated and autonomous.

The Rise of AI in Military Applications

Artificial intelligence is no longer a futuristic concept; it’s actively being implemented across various sectors, including the military. From enhancing strategic decision-making to automating target recognition, AI offers unprecedented capabilities. Understanding how far is too far is critical.

The potential benefits are substantial. AI can process vast amounts of data far faster than human analysts, which can support faster and better-informed decisions. It can also automate dangerous or repetitive tasks, freeing up soldiers to focus on more complex missions.

Key Areas of AI Implementation in the Military

- Intelligence Gathering and Analysis: AI algorithms can sift through massive datasets to identify patterns and predict enemy movements, providing valuable intelligence to military commanders.

- Autonomous Weapons Systems (AWS): Once activated, these AI-powered weapons can select and engage targets without further human intervention, raising serious ethical questions about accountability and the potential for unintended consequences.

- Logistics and Supply Chain Management: AI can optimize the delivery of supplies and equipment to troops on the front lines, ensuring that they have the resources they need when they need them.

- Cyber Warfare: AI can be used to defend against cyberattacks, identify vulnerabilities in enemy systems, and launch offensive operations in cyberspace.

The integration of AI is clearly transformative, but the central question remains: at what point do the inherent risks and ethical costs outweigh the advantages?

Defining Ethical Boundaries: A Complex Challenge

Establishing clear ethical boundaries for the use of AI in warfare is a complex undertaking. It requires careful consideration of various factors, including international law, military doctrine, and societal values. Finding a consensus is proving to be difficult.

One of the biggest challenges is determining the appropriate level of human control. Should AI systems be allowed to make life-or-death decisions without human intervention? Or should humans always be in the loop, providing oversight and ensuring that AI systems are used in accordance with ethical principles?

The Role of International Law

Existing international law, such as the Geneva Conventions, provides some guidance on the use of weapons in armed conflict. However, these laws were written long before the advent of AI, and their applicability to autonomous weapons systems is unclear.

Some experts argue that current laws are sufficient to regulate the use of AI in warfare, while others believe that new treaties or agreements are needed to address the unique challenges posed by this technology.

The Need for Transparency and Accountability

Transparency and accountability are essential for ensuring that AI systems are used ethically. The public has a right to know how these systems are being used and what safeguards are in place to prevent unintended consequences.

Furthermore, it is crucial to establish clear lines of accountability for the actions of AI systems. If an autonomous weapon system makes a mistake and causes harm to civilians, who is responsible? The programmer? The military commander? The manufacturer? Several measures can help address these questions:

- Establish clear protocols: Create guidelines for AI deployment that align with international laws and ethical standards.

- Implement rigorous testing: Ensure AI systems undergo thorough testing and evaluation before field deployment.

- Prioritize human oversight: Maintain human control over critical decisions, especially those involving lethal force.

By addressing these ethical concerns proactively, the U.S. military can harness the power of AI while upholding its commitment to human rights and the laws of war.

Potential Risks and Unintended Consequences

Using AI in warfare introduces significant risks, including bias, lack of transparency, and the potential for unintended consequences. These risks must be carefully analyzed and mitigated to ensure ethical deployment.

One major concern revolves around algorithmic bias. AI systems are trained on data, and if that data reflects existing biases, the AI system will likely perpetuate those biases. In a military context, this could lead to discriminatory targeting or other unfair practices.

The Black Box Problem

Many AI systems, particularly those based on deep learning, are “black boxes.” This means that it is often difficult or impossible to understand how they make decisions. This lack of transparency makes it difficult to identify and correct biases or other flaws in the system.

The potential for unintended consequences is another significant concern. AI systems can behave in unexpected ways, especially in complex or unpredictable environments. A seemingly minor programming error could have catastrophic results on the battlefield.

The Human Element: Maintaining Control and Oversight

Even with advanced AI systems, maintaining a strong human element in warfare is vital. Human oversight and control ensure ethical considerations are not overshadowed by technological capabilities.

This involves ensuring that humans are always in the loop when it comes to critical decisions, particularly those involving the use of lethal force. It also means developing robust protocols for monitoring and evaluating the performance of AI systems and intervening when necessary.

The Importance of Human Judgment

Human judgment is essential for interpreting complex situations, assessing the potential for unintended consequences, and making ethical decisions that AI systems may not be capable of handling. Humans are equipped with empathy, cultural understanding, and moral reasoning – qualities that are difficult to replicate in machines.

AI should be seen as a tool to augment human capabilities, not replace them. By combining the speed and efficiency of AI with the judgment and experience of human soldiers, the U.S. military can achieve superior outcomes while upholding its ethical obligations.

The Future of AI in Warfare: Navigating the Path Forward

The future of AI in warfare is uncertain, but one thing is clear: the U.S. military must proactively address the ethical challenges posed by this technology. This requires a multi-faceted approach that involves collaboration between policymakers, military leaders, technologists, and ethicists.

This proactive approach involves establishing clear ethical guidelines, investing in research and development of AI systems that are transparent and accountable, and implementing training programs to ensure that soldiers understand the capabilities and limitations of AI.

- Maintain continuous dialogue: Keep conversations going among stakeholders to update ethical guidelines as AI technology evolves.

- Invest in AI safety research: Prioritize research into AI safety and reliability to reduce the risk of unintended consequences.

- Promote international cooperation: Work with allies to establish common standards and norms for the responsible use of AI in warfare.

By embracing these principles, the U.S. military can harness the power of AI to enhance its warfighting capabilities while upholding its commitment to ethical conduct and the laws of war.

Balancing Innovation and Ethical Responsibility

Finding the right balance between technological innovation and ethical responsibility is the ultimate challenge. The U.S. military must strive to develop and deploy AI systems that are both effective and ethical.

This requires a commitment to transparency, accountability, and human oversight. It also means being willing to adapt and refine ethical guidelines as AI technology evolves, because guidelines that fall behind the technology leave gaps in oversight.

The Imperative of Ethical AI Development

Developing ethical AI systems requires a holistic approach that considers not only the technical aspects of the technology but also the social, cultural, and political implications. It also requires prioritizing human well-being and ensuring that AI systems are used in a way that promotes peace and stability.

By prioritizing ethical considerations and engaging in open and transparent dialogue, the U.S. military can lead the way in shaping the future of AI in warfare. By doing so, it can help ensure that AI is used in a way that reflects American values and promotes the long-term security and stability of the world.

| Key Point | Brief Description |
|---|---|
| 🤖 AI Integration | AI is enhancing military operations by improving strategic decision-making and automating tasks. |
| ⚖️ Ethical Boundaries | Defining ethical limits is challenging, involving considerations of international law and human control. |
| ⚠️ Potential Risks | AI systems can introduce risks such as bias, lack of transparency, and unintended consequences. |
| 🤝 Human Oversight | Maintaining human control is crucial for ethical decision-making and preventing misuse of AI. |

Frequently Asked Questions (FAQ)

What are autonomous weapons systems?

Autonomous weapons systems are AI-powered weapons that can select and engage targets without human intervention, raising significant ethical and legal concerns.

How can AI bias affect military operations?

AI bias can lead to discriminatory targeting and unfair practices, particularly if the AI is trained on biased data. This poses risks to civilian populations and mission integrity.

Why is transparency important in military AI?

Transparency allows for better understanding and auditing of AI decision-making processes, ensuring accountability and identifying potential flaws or biases in the system.

What role does international law play in regulating AI in warfare?

International laws, such as the Geneva Conventions, provide a framework but may need updates to specifically address AI. These regulations guide the ethical and legal boundaries of AI in combat.

Why is human oversight important?

Human oversight ensures that humans remain in control of critical decisions, providing empathy, cultural understanding, and moral reasoning that AI systems may lack, which helps reduce errors.

Conclusion

Navigating the ethical boundaries of AI in warfare is crucial for the U.S. military. Balancing technological innovation with ethical responsibility allows AI to enhance warfighting capabilities while upholding human rights and international law, so that AI serves humanity’s best interests even in the complex landscape of military applications.